Introduction

“Dancing robot” seems, at first, to be a contradiction in terms. The word “robot” evokes cold machines performing dull, repetitive tasks with no emotion, variation, or interaction, whereas “dance” is a performing art that expresses feelings, conveys creativity, and interacts with music. Yet the emergence of humanoid robots and the pursuit of artificial intelligence have inspired efforts to equip robots with the human capabilities to feel, think, and act. The dancing robot has therefore found a place in both robotics and the arts.

With this project, we aim to explore "creativity" at the intersection of robotics and music, specifically the process of generating dance driven by music as it is being played (online). A demo of our performance is shown below. The piece is performed by NAO in the virtual environment of CoppeliaSim (formerly V-REP). Full screen is recommended!

Demo

Approach

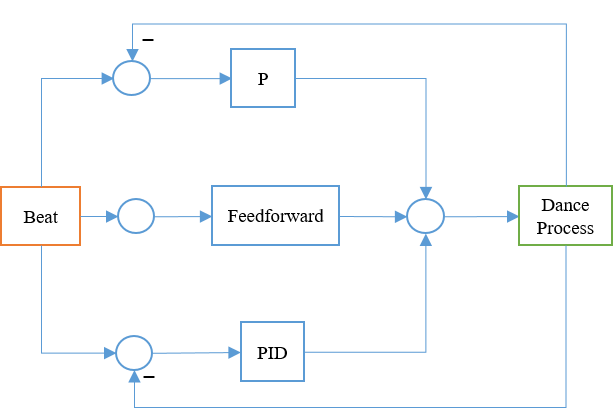

Our real-time dance generation framework is composed of three parts: dance generation, music following, and dance control. For dance generation, we extend unit selection to generate robotic dance sequences; for music following, we use Madmom to detect beat onsets in the background music in real time; for dance control, we build a feedback loop with PID control that synchronizes each dance motion unit to the detected beat onsets. The overall diagram illustrates how the system is divided into these components and how they communicate.
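To make the hand-off between the three parts concrete, here is a minimal sketch of the data flow, under assumptions the original does not spell out: the beat tracker (Madmom in our system) is taken to emit beat onset times in seconds, the current tempo is estimated from the median of recent inter-onset intervals, and each new beat triggers the selection of the next dance unit. The names `estimate_bpm`, `run_pipeline`, and `next_unit` are hypothetical and only illustrate the interfaces.

```python
from statistics import median


def estimate_bpm(onsets, window=4):
    """Estimate tempo (beats per minute) from the most recent beat onset times (s)."""
    recent = onsets[-(window + 1):]  # the last `window` inter-onset intervals
    if len(recent) < 2:
        return None  # not enough beats yet to measure an interval
    intervals = [b - a for a, b in zip(recent, recent[1:])]
    return 60.0 / median(intervals)  # median is robust to a single missed beat


def run_pipeline(onset_stream, next_unit):
    """Hypothetical main loop: on each detected beat, pick the next unit and its target tempo."""
    onsets, schedule = [], []
    for t in onset_stream:  # music following: onsets arrive one at a time
        onsets.append(t)
        bpm = estimate_bpm(onsets)
        if bpm is not None:
            # dance generation picks a unit; dance control receives its target tempo
            schedule.append((t, next_unit(), bpm))
    return schedule
```

In a real-time setting, `onset_stream` would be fed by the beat tracker instead of a list, and the schedule entries would go straight to the controller.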

Overall Diagram

Dance Generation

Unit selection was first introduced in text-to-speech (TTS) systems. Its intuition is that new, intelligible, and natural-sounding speech can be synthesized by concatenating smaller audio units derived from preexisting speech signals [Hunt and Black, 1996; Black and Taylor, 1997; Conkie et al., 2000]. In Bretan et al.'s work, unit selection was first applied to the generation of monophonic music, where music bars are selected iteratively by ranking them on semantic relevance (content similarity) and concatenation cost (continuity). Inspired by this, we generate dance sequences by unit selection, also using two criteria: the semantic relevance between two dance motion units, and the stability of the robot as it transitions from the previous unit to the new one. In this way, we generate dance sequences in an improvisational manner, with dance motions that are both natural-looking and performable by a humanoid robot.
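A minimal sketch of two-criterion unit selection follows. It rests on assumptions that are not in the original: each unit carries a hypothetical feature vector, and semantic relevance is taken as the cosine similarity between feature vectors; stability is approximated by the joint-space distance between the previous unit's final pose and the candidate's initial pose. A real stability criterion for NAO would more likely account for balance (e.g., center of mass), and the weight `lam` and the `top_k` sampling for improvisation are illustrative choices.

```python
import math
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class MotionUnit:
    name: str
    features: tuple    # hypothetical semantic descriptor of the motion
    start_pose: tuple  # joint angles (rad) at the first frame
    end_pose: tuple    # joint angles (rad) at the last frame


def cosine(a, b):
    """Semantic relevance proxy: cosine similarity of feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def transition_cost(prev, cand):
    """Stability proxy: how far the joints must travel between the two units."""
    return math.dist(prev.end_pose, cand.start_pose)


def select_next(prev, candidates, lam=1.0, top_k=1, rng=None):
    """Rank candidates by relevance minus weighted transition cost; pick from the top k."""
    scored = sorted(
        candidates,
        key=lambda c: cosine(prev.features, c.features) - lam * transition_cost(prev, c),
        reverse=True,
    )
    return (rng or random).choice(scored[:top_k])  # top_k > 1 adds improvisational variety


def generate(start, library, length, **kw):
    """Greedily concatenate units, never repeating the unit just performed."""
    seq = [start]
    for _ in range(length - 1):
        candidates = [u for u in library if u is not seq[-1]]
        seq.append(select_next(seq[-1], candidates, **kw))
    return seq


if __name__ == "__main__":
    wave = MotionUnit("arm_wave", (1.0, 0.0), (0.0, 0.0), (0.5, 0.0))
    swing = MotionUnit("arm_swing", (0.9, 0.1), (0.5, 0.0), (0.0, 0.0))
    squat = MotionUnit("squat", (0.0, 1.0), (1.5, 1.5), (0.0, 0.0))
    print([u.name for u in generate(wave, [wave, swing, squat], 3)])
    # → ['arm_wave', 'arm_swing', 'arm_wave']
```

With `top_k=1` the choice is deterministic; passing `top_k=3` and a seeded `random.Random` would let the sequence vary between performances while still favoring stable, relevant transitions.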

Music Following and Dance Control

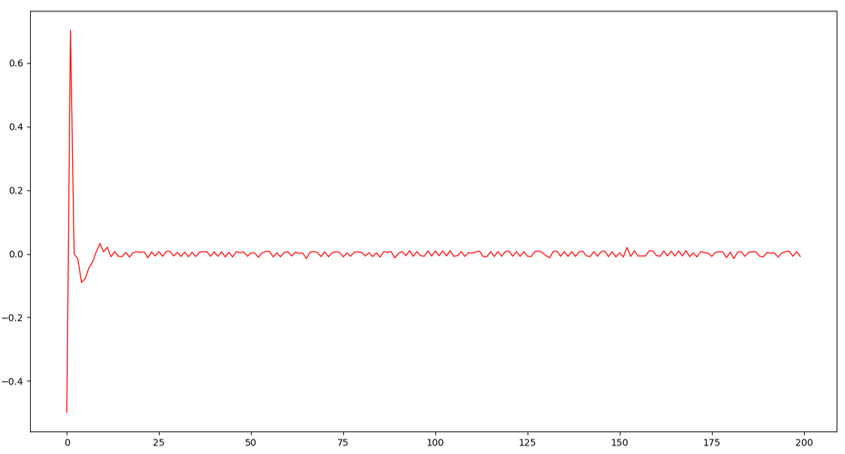

We use Madmom to track beat onsets in the real-time music input, and a feedback loop with PID control to synchronize the generated dance with the music. The block diagram of our PID control is shown below. The graph on the right shows our synchronization performance, with the horizontal axis representing time and the vertical axis representing tempo error.
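The feedback loop can be illustrated with a textbook discrete-time PID controller. This is a minimal sketch, not our tuned controller: it assumes the error is the tempo difference (music BPM minus robot dance BPM), that the controller updates once per detected beat, and that the robot adopts the corrected tempo immediately. The gains in `simulate` are hypothetical values chosen only so the toy loop converges.

```python
class PID:
    """Textbook discrete-time PID controller."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        self.integral += error * dt  # accumulated error (I term)
        # Rate of change of the error (D term); zero on the first update.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(music_bpm=120.0, robot_bpm=100.0, beats=40, gains=(0.5, 0.2, 0.02)):
    """Correct the robot's dance tempo once per beat; return the tempo-error trace."""
    pid = PID(*gains)
    errors = []
    for _ in range(beats):
        dt = 60.0 / music_bpm          # time between beat onsets (s)
        error = music_bpm - robot_bpm  # tempo error (BPM)
        errors.append(error)
        # Assumption: the robot switches to the corrected tempo immediately.
        robot_bpm += pid.update(error, dt)
    return errors
```

Plotting `simulate()` against the beat index gives an error-versus-time curve of the same kind as the graph described above: a damped oscillation that settles near zero. In practice, motor latency and beat-tracking jitter would call for retuning the gains.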

Music Following Control Loop

Music Following Error-Time Graph